AI-powered penetration testing is rapidly emerging as the future of threat validation. Traditional methods have long served as a foundational control for understanding how systems might be exploited, but they fall short in today’s dynamic enterprise environments. Cloud-native deployments, hybrid IT/OT networks, and rapid code delivery cycles have outpaced conventional models, leaving them inadequate. The problem isn’t that penetration testing has lost relevance; it’s that its conventional execution model hasn’t evolved to match the complexity, dynamism, and scale of modern infrastructure.

Organizations still largely depend on scheduled engagements, typically conducted once or twice a year and focused on a fixed scope of assets. These tests produce static reports outlining vulnerabilities and recommendations. But between the time a vulnerability is identified and the time action is taken, environments often change. New assets emerge. Configurations drift. Attack surfaces expand. And somewhere in that gap, adversaries gain their foothold.

Why Traditional Testing Breaks Down in Modern Environments

In legacy models, penetration testing is scoped manually. Testers are handed a sanitized asset inventory and a narrow window for execution. The testing process is human-led, the reporting process static, and the remediation often disconnected from operational workflows. Valuable as these tests may be for compliance or external assurance, they don’t offer coverage for assets that are constantly changing or the nuanced risks that emerge from how systems interact in the real world.

Even the best traditional test can miss the forest for the trees. A misconfigured storage bucket might go unnoticed because it wasn’t in scope. An internal API vulnerable to privilege escalation may never be exercised. And complex exploit chains, which link together multiple lower-risk issues, are often left unexplored due to time constraints or lack of visibility.

Meanwhile, vulnerability scanners continue to flood security teams with unfiltered output: long lists of CVEs, many of which are unexploitable or irrelevant in their real-world context. This results in teams either burning time chasing phantom risks or ignoring findings altogether.

What’s needed is not just vulnerability discovery, but vulnerability validation and the ability to do so continuously, at scale, with attacker-level reasoning.

Why AI Makes Continuous, Context-Aware Penetration Testing Possible

Penetration testing, as traditionally practiced, assumes a slow-moving threat. You test once a year, run a few tools, get a report, and fix what you can. The problem is that environments change rapidly: new assets come online, legacy assets accumulate misconfigurations, and settings drift from their baselines. Attackers do not wait for your audit cycles.

This is where AI changes the rules. Instead of working off predefined scripts or sanitized inventories, AI-powered testing systems behave like active adversaries. They scan your infrastructure in real time, identify vulnerable patterns, and chain together multi-step attack paths based on the actual relationships between your systems.

Imagine this:

- A misconfigured S3 bucket is exposed to the internet.

- AI detects that it contains files with personally identifiable information based on filename heuristics.

- It identifies that the same storage bucket links to a backend API with insecure token handling.

- It simulates a chain of privilege escalation, leading to full access to the billing database.

This goes beyond CVE matching. It represents a contextual exploit path simulation that reflects how real attackers navigate systems.

What enables this?

- Large language models (LLMs) that interpret scan outputs and generate payload logic based on real-world threat scenarios.

- Graph reasoning engines that map trust relationships, user privileges, and application flows across cloud and on-prem systems.

- Adaptive learning models that prioritize findings based on the business impact, not just technical severity.
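At its core, the S3-to-billing-database scenario above is a path search over a graph of trust relationships. The following is a minimal sketch, assuming a hand-built graph whose node names and edges are hypothetical stand-ins for what a graph reasoning engine would derive automatically. It uses breadth-first search to find the shortest chain:

```python
from collections import deque

# Hypothetical attack graph for the scenario above: an edge A -> B means
# "an attacker who controls A can plausibly reach or abuse B".
ATTACK_GRAPH = {
    "internet": ["s3_bucket"],            # bucket exposed to the internet
    "s3_bucket": ["backend_api"],         # bucket links to a backend API
    "backend_api": ["api_service_role"],  # insecure token handling
    "api_service_role": ["billing_db"],   # privilege escalation
    "billing_db": [],
}


def shortest_attack_path(graph, source, target):
    """Breadth-first search: return the fewest-hop path, or None if unreachable."""
    queue = deque([[source]])
    visited = {source}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == target:
            return path
        for neighbor in graph.get(node, []):
            if neighbor not in visited:
                visited.add(neighbor)
                queue.append(path + [neighbor])
    return None


print(" -> ".join(shortest_attack_path(ATTACK_GRAPH, "internet", "billing_db")))
# internet -> s3_bucket -> backend_api -> api_service_role -> billing_db
```

Breadth-first search returns the path with the fewest hops, which is a reasonable first proxy for the easiest chain. A production engine would more likely weight edges by exploit difficulty and use a weighted shortest-path algorithm.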

AI does not eliminate the need for red teams. Instead, it enables security teams to maintain adversarial pressure continuously. It removes the delay between discovery and validation. It eliminates blind spots that arise from static scoping or outdated asset lists.

The result is not just more testing. It is better testing, done more often, with a clearer view of what actually puts your business at risk.

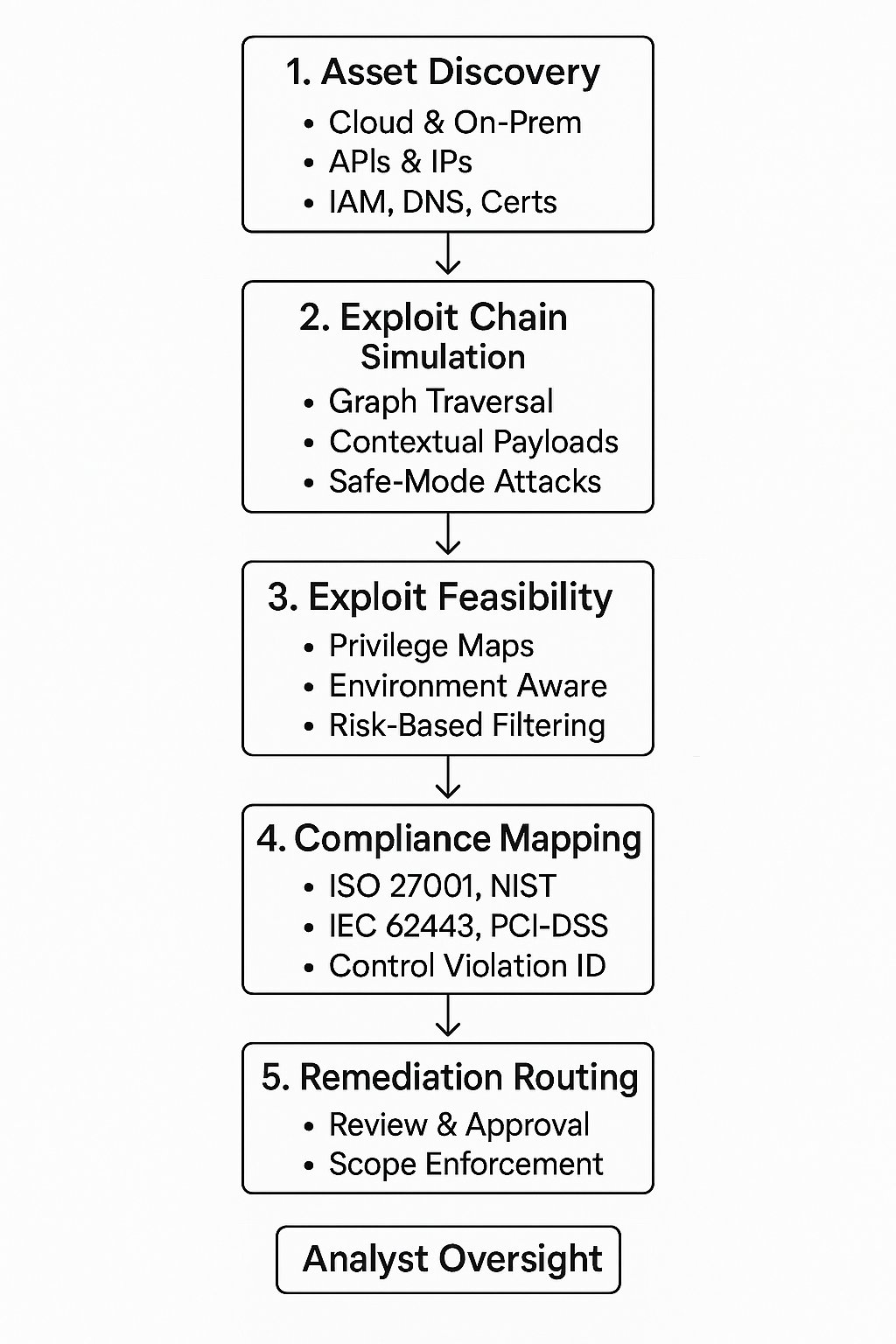

Architecture: Inside an AI Penetration Testing System

Beneath the promise of continuous, intelligent testing lies a tightly engineered architecture. AI-powered penetration testing platforms are not single-purpose scanners. They are modular systems built to simulate real adversarial behavior while staying aligned with enterprise-grade security, compliance, and operational constraints.

Each component has a distinct role, yet they function in a feedback-driven loop. The intelligence improves as the system learns more about the environment, its behaviors, and its vulnerabilities.

Here is a breakdown of the core components:

1. Asset Discovery and Attack Surface Mapping

The system begins with discovery, identifying everything that could be targeted, including external IPs, cloud assets, internal hosts, APIs, exposed services, and unmanaged devices.

Unlike conventional scanners that rely solely on IP range sweeps, AI-assisted discovery uses:

- Natural language models to interpret configuration files, cloud IAM roles, and documentation.

- DNS and certificate fingerprinting to find shadow assets and cloned domains.

- Passive reconnaissance techniques to minimize noise and avoid detection.

The goal is to build a living inventory that continuously reflects the organization’s true attack surface.
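A living inventory implies comparing successive discovery snapshots. The sketch below uses a hypothetical `Asset` record and `diff_inventory` helper to show the core set arithmetic. Anything present now but absent before is a new asset to test. Anything that has vanished may be decommissioned or simply unreachable.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """Hypothetical minimal asset record; real platforms track far more."""
    identifier: str  # IP, hostname, or cloud resource ID
    source: str      # discovery technique that reported it


def diff_inventory(previous, current):
    """Compare two discovery snapshots by asset identifier."""
    prev_ids = {a.identifier for a in previous}
    curr_ids = {a.identifier for a in current}
    return {
        "new": sorted(curr_ids - prev_ids),      # queue for testing right away
        "removed": sorted(prev_ids - curr_ids),  # decommissioned or now unreachable
    }


yesterday = [Asset("10.0.1.23", "ip_sweep"), Asset("old.example.com", "dns")]
today = [Asset("10.0.1.23", "ip_sweep"), Asset("shadow.example.com", "cert_fingerprint")]
print(diff_inventory(yesterday, today))
# {'new': ['shadow.example.com'], 'removed': ['old.example.com']}
```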

2. Exploit Chain Simulation

Once assets are mapped, the system begins simulating how an attacker could move through the environment. This involves:

- Graph traversal models that calculate feasible exploit paths.

- Contextual decision trees to test lateral movement, privilege escalation, and pivot scenarios.

- Use of safe payloads to test inputs without causing harm (e.g., DNS callbacks, dummy shell uploads).

Instead of stopping at individual vulnerabilities, the AI attempts to validate how those issues interact and escalate, modeling behavior, not just misconfigurations.
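A DNS-callback probe is safe because the payload only asks the target to resolve a hostname. If the query reaches infrastructure the tester controls, the injected input was processed. The sketch below shows how unique per-probe hostnames make those callbacks attributable to a specific test. The callback domain and function names are hypothetical, and actually receiving the queries would require an authoritative nameserver for that zone, which is not shown.

```python
import secrets

# Hypothetical callback domain; a real setup needs an authoritative DNS server
# for this zone that logs incoming queries.
CALLBACK_DOMAIN = "oast.example.com"


def make_callback_token(test_id, callback_domain=CALLBACK_DOMAIN):
    """Build a unique hostname for one probe; resolving it is harmless."""
    nonce = secrets.token_hex(4)  # 8 random lowercase hex characters
    return f"{test_id}-{nonce}.{callback_domain}"


def correlate_callbacks(issued, observed_queries):
    """Return {token: test_id} for issued tokens that showed up in DNS logs."""
    # DNS names are case-insensitive and may carry a trailing root dot
    seen = {q.rstrip(".").lower() for q in observed_queries}
    return {token: test_id for token, test_id in issued.items() if token in seen}


issued = {make_callback_token(t): t for t in ("t1", "t2")}
first_token = next(iter(issued))
hits = correlate_callbacks(issued, [first_token.upper() + "."])
print(sorted(hits.values()))  # ['t1']
```

Test IDs are assumed to be lowercase and valid as DNS labels.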

3. Exploit Feasibility Engine

Not all findings are exploitable. This module filters out theoretical risks and focuses on what an attacker could realistically achieve. It incorporates:

- Environmental data (e.g., software versions, patch history, service reachability).

- Privilege maps that estimate the blast radius of a compromise.

- Real-time decision models that adjust based on observed behavior during testing.

This helps avoid alert fatigue and allows defenders to prioritize the vulnerabilities that matter.
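The filtering and blast-radius ideas fit in a few lines of code. Everything in the sketch below is hypothetical. The `Finding` fields stand in for environmental data such as reachability and patch history, and `PRIVILEGE_MAP` stands in for a real privilege map. Findings that are unreachable or already patched are dropped, and the remainder are ranked by how many assets a compromise would transitively expose.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    asset: str
    issue: str
    reachable: bool  # service reachable from the attacker's assumed position
    patched: bool    # patch history shows the fix is already applied


# Hypothetical privilege map: compromising the key grants a foothold on each value
PRIVILEGE_MAP = {
    "web01": ["erp01"],
    "erp01": ["erp_db", "ot_gateway"],
    "erp_db": [],
    "ot_gateway": [],
}


def blast_radius(asset, privilege_map):
    """Count distinct assets transitively reachable from a compromised asset."""
    seen, stack = set(), [asset]
    while stack:
        for nxt in privilege_map.get(stack.pop(), []):
            if nxt not in seen and nxt != asset:
                seen.add(nxt)
                stack.append(nxt)
    return len(seen)


def feasible_and_ranked(findings, privilege_map):
    """Drop unreachable or patched findings; rank the rest by blast radius."""
    feasible = [f for f in findings if f.reachable and not f.patched]
    return sorted(feasible, key=lambda f: blast_radius(f.asset, privilege_map), reverse=True)


findings = [
    Finding("web01", "Apache 2.4.29 upload filter bypass", reachable=True, patched=False),
    Finding("erp01", "Weak admin credentials", reachable=True, patched=False),
    Finding("erp_db", "Outdated database engine", reachable=False, patched=False),
    Finding("web01", "Outdated OpenSSH", reachable=True, patched=True),
]
print([f.asset for f in feasible_and_ranked(findings, PRIVILEGE_MAP)])  # ['web01', 'erp01']
```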

4. Compliance Mapping and Reporting

Every validated exploit path is automatically checked against applicable controls from frameworks such as:

- ISO/IEC 27001

- NIST Cybersecurity Framework

- PCI-DSS

- IEC 62443 (for OT systems)

Mapped reports do not just say “this asset is vulnerable.” They say “this finding violates control A.13.2.3 of ISO 27001” and provide remediation aligned with those frameworks.

This enables CISOs and auditors to tie technical risk to compliance impact without manual correlation.
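Mechanically, compliance mapping can start as a lookup from a finding category to control references. The sketch below is an illustrative crosswalk, not an authoritative one. The category names are hypothetical. The ISO/IEC 27001 ID uses the 2013 Annex A numbering, and the 2022 revision renumbers Annex A. The IEC 62443 entries are system requirements from IEC 62443-3-3.

```python
# Illustrative crosswalk only; category keys are hypothetical.
CONTROL_MAP = {
    "unpatched_software": [
        ("ISO/IEC 27001", "A.12.6.1", "Management of technical vulnerabilities"),
    ],
    "weak_credentials": [
        ("IEC 62443-3-3", "SR 1.7", "Strength of password-based authentication"),
    ],
    "missing_segmentation": [
        ("IEC 62443-3-3", "SR 5.2", "Zone boundary protection"),
    ],
}


def map_finding(finding):
    """Return a copy of the finding with control references attached."""
    controls = CONTROL_MAP.get(finding["category"], [])  # unknown category -> no mapping
    return {
        **finding,
        "compliance_mapping": [
            {"framework": fw, "control": cid, "title": title}
            for fw, cid, title in controls
        ],
    }


mapped = map_finding({"asset": "10.0.1.23", "category": "unpatched_software"})
print(mapped["compliance_mapping"][0]["control"])  # A.12.6.1
```

A real platform would maintain this table per framework version and let auditors review it. A finding with an unmapped category comes back with an empty list rather than a guessed control.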

5. Remediation Workflow Integration

Findings are routed into operational workflows through integration with tools such as:

- Jira, ServiceNow (ticketing)

- Splunk, QRadar, Sentinel (SIEMs)

- Prisma, Qualys, or Tenable (VM tools)

Each issue includes metadata on severity, affected systems, simulated exploit chains, and mapped controls. Security teams can validate or reject issues, assign owners, and track resolution over time, all within existing systems.
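The following sketch shows how that metadata might be packed into a ticket. It builds the JSON body for Jira's REST API v2 create-issue endpoint (`POST /rest/api/2/issue`). The project key `SEC` (chosen to match the ticket ID used later in this article), the `Bug` issue type, and the labels are assumptions about the target Jira instance. The function only builds the payload and makes no network call.

```python
import json


def build_jira_issue(finding, project_key="SEC"):
    """Build a body for Jira's REST API v2 create-issue call (POST /rest/api/2/issue).

    Assumptions: project key "SEC" and issue type "Bug" exist in the target
    Jira instance; v2 accepts a plain-text description (v3 expects ADF).
    """
    steps = [f"  {i}. {step}" for i, step in enumerate(finding["exploit_path"], start=1)]
    description = "\n".join(
        [
            f"Affected asset: {finding['asset']}",
            f"Severity: {finding['severity']}",
            "Simulated exploit chain:",
            *steps,
            "Mapped controls: " + ", ".join(finding["controls"]),
        ]
    )
    return {
        "fields": {
            "project": {"key": project_key},
            "issuetype": {"name": "Bug"},
            "summary": f"[{finding['severity']}] {finding['title']} on {finding['asset']}",
            "description": description,
            "labels": ["ai-pentest", finding["severity"].lower()],  # labels cannot contain spaces
        }
    }


example_finding = {
    "asset": "10.0.1.23",
    "severity": "High",
    "title": "Upload filter bypass (CVE-2017-15715)",
    "exploit_path": ["Web shell upload", "Internal ERP discovery", "Weak admin credentials"],
    "controls": ["ISO/IEC 27001 A.12.6.1"],
}
print(json.dumps(build_jira_issue(example_finding), indent=2))
```

Sending the payload is a single authenticated POST to the Jira instance. Authentication (API token or OAuth) depends on the deployment and is omitted here.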

AI in Action: Real-World Penetration Testing of a Hybrid IT/OT Environment

Scenario: A global manufacturing firm operates a mixed environment of legacy OT systems, modern cloud services, and internal enterprise apps. The security team initiates a safe-mode AI penetration test focused on internal systems and their exposure paths from internet-facing infrastructure.

Here’s how the AI engine simulated a full exploit chain in under 20 minutes.

Step 1: Reconnaissance Using GPT-Augmented Nmap

The engine begins by scanning a defined subnet range to discover exposed services. A GPT-powered parser is used to interpret scan results and prioritize potentially vulnerable hosts.

```python
# GPT-Augmented Nmap Scanning
# Uses an OpenAI chat model to prioritize hosts after scanning a subnet.
# Requires nmap on PATH, the openai package (v1.x SDK), and OPENAI_API_KEY set.

import subprocess

from openai import OpenAI


def run_nmap(ip_range):
    """Run a service/version scan (-sV) and return Nmap's text output."""
    try:
        result = subprocess.check_output(
            ["nmap", "-sV", ip_range], stderr=subprocess.STDOUT
        )
        return result.decode()
    except subprocess.CalledProcessError as e:
        return f"Scan failed: {e.output.decode()}"


def analyze_scan(scan_output):
    """Ask the model to summarize and prioritize the scan results."""
    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    messages = [
        {"role": "system", "content": "You are a cybersecurity analyst."},
        {"role": "user", "content": f"Summarize and prioritize the following Nmap results:\n{scan_output}"},
    ]
    response = client.chat.completions.create(
        model="gpt-4",
        messages=messages,
    )
    return response.choices[0].message.content


scan_output = run_nmap("10.0.1.0/24")
print(analyze_scan(scan_output))
```

Sample model output:

```text
Host: 10.0.1.23
- Port 80: Apache 2.4.29 (Vulnerable to CVE-2017-15715)
- Port 22: OpenSSH 7.4 (Outdated, but no known remote exploit)
Recommended action: Upgrade Apache, disable directory listing.
```
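Feeding the model Nmap's raw text works. Parsing Nmap's XML output is an alternative that gives the model compact, structured input and makes results easy to diff between runs. Passing `["nmap", "-sV", "-oX", "-", ip_range]` to `subprocess` writes XML to stdout. The following is a minimal standard-library sketch. The sample XML is a trimmed, hand-written fragment in Nmap's format, not real scan output.

```python
import xml.etree.ElementTree as ET

# Trimmed, hand-written fragment in the shape of `nmap -sV -oX -` output
SAMPLE_XML = """<nmaprun>
  <host>
    <address addr="10.0.1.23" addrtype="ipv4"/>
    <ports>
      <port protocol="tcp" portid="22">
        <state state="open"/>
        <service name="ssh" product="OpenSSH" version="7.4"/>
      </port>
      <port protocol="tcp" portid="80">
        <state state="open"/>
        <service name="http" product="Apache httpd" version="2.4.29"/>
      </port>
    </ports>
  </host>
</nmaprun>"""


def parse_nmap_xml(xml_text):
    """Return [{'address': ..., 'services': [...]}] per host, open ports only."""
    root = ET.fromstring(xml_text)
    hosts = []
    for host in root.findall("host"):
        addr_el = host.find("address[@addrtype='ipv4']")
        if addr_el is None:
            addr_el = host.find("address")  # fall back to IPv6/MAC if no IPv4
        services = []
        for port in host.findall("ports/port"):
            state = port.find("state")
            if state is None or state.get("state") != "open":
                continue
            svc = port.find("service")
            services.append({
                "port": int(port.get("portid")),
                "protocol": port.get("protocol"),
                "product": svc.get("product", "") if svc is not None else "",
                "version": svc.get("version", "") if svc is not None else "",
            })
        address = addr_el.get("addr") if addr_el is not None else None
        hosts.append({"address": address, "services": services})
    return hosts


for host in parse_nmap_xml(SAMPLE_XML):
    for svc in host["services"]:
        print(host["address"], f"{svc['port']}/{svc['protocol']}", svc["product"], svc["version"])
# 10.0.1.23 22/tcp OpenSSH 7.4
# 10.0.1.23 80/tcp Apache httpd 2.4.29
```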

Step 2: Simulating Exploit Chains via Prompt Engineering

The AI then builds an exploit chain using known weaknesses and asset relationships. This simulation is done in safe mode (no real payloads are deployed).

```python
# Simulate an exploit chain in safe mode using prompt engineering.
# The model only describes the steps; nothing is executed against the target.
from openai import OpenAI

client = OpenAI()  # reads OPENAI_API_KEY from the environment

prompt = """
System Details:
- Apache 2.4.29 with directory listing enabled
- Open upload directory: /var/www/html/uploads
- Internal ERP system reachable at 10.0.2.10
- Weak credentials found: admin:admin123
- OT network subnet: 10.0.3.0/24

Simulate a 4-step, safe-mode attack path that could reach the ERP system.
"""

response = client.chat.completions.create(
    model="gpt-4",
    messages=[{"role": "user", "content": prompt}],
)
print(response.choices[0].message.content)
```

Sample model output:

```text
1. Upload a PHP reverse shell via the open directory on Apache.
2. Execute shell by accessing http://target/uploads/shell.php.
3. Enumerate internal network and identify ERP host (10.0.2.10).
4. Use default credentials to access ERP admin panel and extract data (read-only simulation).
```
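Before the model's free-form answer can populate a structured report, the numbered steps need to be extracted. The following is a minimal sketch, assuming the model answers with `1.` or `2)` style numbered lines as in the sample above. Asking the model for JSON output directly is a sturdier alternative.

```python
import re

# Matches lines such as "1. Step text" or "2) Step text"
STEP_PATTERN = re.compile(r"^\s*(\d+)[.)]\s+(.*\S)\s*$")


def parse_steps(llm_text):
    """Extract numbered steps, in order, from free-form model output."""
    steps = []
    for line in llm_text.splitlines():
        match = STEP_PATTERN.match(line)
        if match:
            steps.append(match.group(2))
    return steps


sample = """Here is a safe-mode path:
1. Upload a PHP reverse shell via the open directory on Apache.
2. Execute shell by accessing http://target/uploads/shell.php.
"""
print(parse_steps(sample))
```

The extracted list can then become the `exploit_path` array of the report.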

Step 3: AI Output with Compliance Mapping

Once a simulated exploit is validated, the AI system generates output that includes the exploit chain, severity, affected controls, and remediation guidance.

```json
{
  "exploit_path": [
    "CVE-2017-15715 exploited via Apache 2.4.29",
    "Web shell deployed via open directory",
    "ERP server discovered and accessed",
    "Weak credentials reused to access admin panel"
  ],
  "compliance_mapping": [
    {"framework": "ISO/IEC 27001", "control": "A.12.6.1", "issue": "Unpatched software vulnerability"},
    {"framework": "IEC 62443", "control": "SR 5.2", "issue": "Insufficient zone boundary protection"}
  ],
  "remediation": [
    "Upgrade Apache to latest stable version",
    "Disable directory listing and restrict uploads",
    "Implement network segmentation between OT and ERP zones",
    "Enforce strong credentials and 2FA on internal systems"
  ]
}
```

This output is used both for dashboards and for integration with tools like Jira, ServiceNow, or Splunk.

Optional: Structured API Output for CI/CD Integration

For teams running automated tests as part of their pipelines, the AI engine can return results in structured JSON. This allows seamless routing of high-priority risks to security engineers.

```json
{
  "type": "vulnerability",
  "asset": "10.0.1.23",
  "finding": "Upload filter bypass (CVE-2017-15715) in Apache 2.4.29",
  "exploitable": true,
  "linked_issues": [
    "Privilege escalation via weak ERP credentials",
    "Lateral movement into OT subnet"
  ],
  "severity": "High",
  "ticket_created": true,
  "ticket_id": "SEC-4281"
}
```

These structured outputs can also trigger rollbacks or testing gates in CI/CD pipelines when critical issues are detected.
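A pipeline gate can be as simple as a script that reads the findings JSON and exits with a non-zero code when an exploitable, severe finding appears, because most CI systems fail a stage on a non-zero exit code. The sketch below assumes findings shaped like the JSON above, either a single object or a list of them. It also assumes that the team chooses which severities block a build.

```python
import json
import sys

# Assumption: the team blocks on exploitable findings rated High or Critical
BLOCKING_SEVERITIES = {"critical", "high"}


def should_block(findings):
    """Return exploitable findings whose severity meets the blocking threshold."""
    return [
        f for f in findings
        if f.get("exploitable") and str(f.get("severity", "")).lower() in BLOCKING_SEVERITIES
    ]


def main(path):
    """Load findings JSON, report blockers, and return a process exit code."""
    with open(path, encoding="utf-8") as fh:
        data = json.load(fh)
    findings = data if isinstance(data, list) else [data]  # accept one object or a list
    blockers = should_block(findings)
    for f in blockers:
        print(f"BLOCKING: {f.get('finding')} on {f.get('asset')} ({f.get('severity')})")
    return 1 if blockers else 0


if __name__ == "__main__" and len(sys.argv) > 1:
    sys.exit(main(sys.argv[1]))
```

In a pipeline, a step such as `python gate.py findings.json` would fail the build whenever `main` returns 1. Both file names are hypothetical.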

Real-World Summary

The full simulation, run in safe mode, generated a prioritized set of findings. It exposed how a seemingly minor issue (an outdated Apache version with directory listing enabled) could escalate into unauthorized access of the ERP system and potentially pivot toward critical OT infrastructure.

- Total time: 17 minutes
- Human oversight required: 1 analyst to review 3 findings
- Outcome: 2 Jira tickets filed, 1 compliance gap resolved

Human-in-the-Loop Testing: Ensuring Safe, Responsible AI Exploits

Despite the sophistication of AI-driven engines, penetration testing remains a high-stakes activity. One false positive could waste hours of remediation effort. One aggressive test in a production environment could trigger an outage. This is why responsible AI-powered testing requires a hybrid approach: automated where safe and repeatable, human-led where judgment is essential.

AI as Analyst, Not Arbitrator

The AI engine operates as a continuous adversary. It maps infrastructure, simulates lateral movement, and correlates risk across systems. However, it lacks contextual awareness of operational constraints, compensating controls, and organizational policy.

Human analysts bring the judgment required at these common review points:

Scope Approval

Analysts determine which systems may be tested and which actions are permitted. For example, simulations may be restricted to read-only operations in production environments.

Exploit Chain Verification

Before a finding is escalated, an analyst validates whether the exploit path is realistic. This includes checking if each hop in the chain is truly exploitable, reachable, and impactful.

Ticket Routing and Tagging

Once confirmed, validated findings are enriched and sent to remediation teams using structured metadata and appropriate severity classification.

Code Example: Analyst-Guided Ticket Creation

This Python snippet shows how an AI-generated finding is paused for review before ticket submission.

```python
# Human-in-the-loop validation before ticket creation

# Simulated AI-generated finding
ai_finding = {
    "asset": "10.0.2.15",
    "vulnerability": "Weak admin credentials on ERP login",
    "exploit_path": ["Web portal access", "Credential reuse", "Database exposure"],
    "risk_rating": "High",
}


# Human analyst review step: high-risk findings need explicit approval
def validate_finding(finding):
    if finding["risk_rating"] == "High":
        print("Manual review required before ticket creation.")
        # Analyst inspects details via dashboard or CLI
        decision = input("Approve finding? (yes/no): ")
        return decision.strip().lower() == "yes"
    return True


# Ticket creation function (e.g., integration with Jira)
def create_ticket(finding):
    print(f"Creating ticket for: {finding['vulnerability']} on {finding['asset']}")
    # Logic to push to ticketing platform goes here


if validate_finding(ai_finding):
    create_ticket(ai_finding)
else:
    print("Finding rejected by analyst.")
```

This ensures that no high-severity ticket is created without human confirmation, especially when exploit simulation involves sensitive business systems.

When Human Oversight is Essential

AI is extremely effective at discovering technical possibilities. However, only a human analyst can determine whether an exploit path is:

- Logically sound in the context of the architecture

- Likely to be abused based on threat intelligence

- Relevant to compliance, policy, or real-world attacker behavior

This hybrid approach prevents false positives, avoids testing-related disruptions, and aligns testing with enterprise risk tolerance.

Safe Execution During Simulated Attacks

Simulating attacker behavior across live infrastructure requires surgical precision. While traditional testing often relies on manual boundaries and best-effort coordination, AI-driven testing introduces automated decision-making into environments that cannot afford downtime.

This makes execution safety a critical architectural pillar.

Execution Modes Designed for Risk Isolation

Most AI penetration testing engines are built to support multiple execution modes, each aligned with a specific level of operational tolerance:

- Passive Mode: No payloads are sent. The system analyzes metadata, configurations, DNS records, SSL/TLS protocols, and exposed services without generating traffic that could trigger alarms or system instability.

- Safe Mode (Default): Payloads are crafted to simulate exploitation but never execute destructive or state-changing actions. Shells are inert. Scripts are harmless. Authentication attempts use known credentials, never brute-force.

- Active Mode: Enabled only in controlled environments such as staging or red-teaming zones. The system is allowed to attempt privilege escalation, test real exploit chains, and validate persistence techniques.

These modes are enforced programmatically using execution policies defined by security teams.

Sample Configuration: Mode Enforcement Policy

The following YAML configuration defines testing constraints based on asset group and environment:

```yaml
environments:
  production:
    mode: safe
    allow_write: false
    allow_authentication_attempts: true
    exclude_services:
      - scada
      - backup_servers
  staging:
    mode: active
    allow_write: true
    allow_authentication_attempts: true
  test_lab:
    mode: active
    allow_write: true
    allow_network_scanning: true
```

This configuration ensures that:

- In production, only non-invasive simulations are allowed.

- SCADA and backup systems are completely excluded.

- Staging zones allow write operations for deeper validation.

- Internal test labs offer full execution freedom for AI engines to train, iterate, and validate high-risk behavior without constraints.
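Programmatic enforcement of such a policy comes down to a deny-by-default check before every action. The sketch below mirrors the YAML above as a Python dict so that it needs no third-party parser. In practice, the file might be loaded with a YAML library instead. The action names and the minimum mode each one requires are a hypothetical taxonomy, not part of the configuration above.

```python
# Mirrors the YAML policy above (loaded from a file in a real system)
POLICY = {
    "production": {
        "mode": "safe",
        "allow_write": False,
        "allow_authentication_attempts": True,
        "exclude_services": ["scada", "backup_servers"],
    },
    "staging": {"mode": "active", "allow_write": True, "allow_authentication_attempts": True},
    "test_lab": {"mode": "active", "allow_write": True, "allow_network_scanning": True},
}

# Hypothetical action taxonomy: the least permissive mode each action needs
MODE_RANK = {"passive": 0, "safe": 1, "active": 2}
ACTION_MIN_MODE = {
    "read_metadata": "passive",
    "inert_payload": "safe",
    "auth_attempt": "safe",
    "write": "active",
    "privilege_escalation": "active",
}


def is_allowed(environment, action, service):
    """Deny by default; allow only what the environment's policy permits."""
    policy = POLICY.get(environment)
    if policy is None or action not in ACTION_MIN_MODE:
        return False  # unknown environment or action
    if service in policy.get("exclude_services", []):
        return False  # e.g., SCADA in production is never touched
    if MODE_RANK[policy["mode"]] < MODE_RANK[ACTION_MIN_MODE[action]]:
        return False
    if action == "write" and not policy.get("allow_write", False):
        return False
    if action == "auth_attempt" and not policy.get("allow_authentication_attempts", False):
        return False
    return True


print(is_allowed("production", "inert_payload", "web"))    # True
print(is_allowed("production", "write", "web"))            # False
print(is_allowed("production", "read_metadata", "scada"))  # False
```

Because missing keys count as denials, the `test_lab` entry, which omits `allow_authentication_attempts`, blocks login attempts there. Whether that matches the intended "full execution freedom" is a policy decision to make explicit in the file.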

Runtime Guardrails and Rollbacks

In addition to static policies, runtime safety controls are applied:

- Kill switches can halt all tests instantly across tenants or environments.

- Execution caps limit the number of simulated exploits per session.

- Auto-pause triggers monitor for unexpected system responses and suspend testing when anomalies such as high CPU load or network saturation are detected.

This provides an additional layer of control, even if policies are misconfigured or environments change mid-test.
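The three runtime controls can share a single guard object that the engine consults before every simulated exploit. The following is a minimal sketch. The class name, the default cap, and the CPU threshold are hypothetical. The caller is assumed to feed in target CPU readings from its own monitoring, and network saturation is not modeled.

```python
import threading


class RuntimeGuard:
    """Sketch of runtime guardrails: kill switch, execution cap, auto-pause."""

    def __init__(self, max_exploits=25, cpu_pause_threshold=85.0):
        self.kill_switch = threading.Event()  # any thread may call .set() to halt testing
        self.max_exploits = max_exploits      # hypothetical per-session cap
        self.cpu_pause_threshold = cpu_pause_threshold  # percent CPU on the target
        self.executed = 0
        self.paused = False
        self._lock = threading.Lock()         # protects executed/paused across threads

    def record_telemetry(self, cpu_percent):
        """Auto-pause when target CPU crosses the threshold; resuming is left to a human."""
        with self._lock:
            if cpu_percent >= self.cpu_pause_threshold:
                self.paused = True

    def may_execute(self):
        """Check every guard before each simulated exploit; counts approved runs."""
        with self._lock:
            if self.kill_switch.is_set() or self.paused:
                return False
            if self.executed >= self.max_exploits:
                return False
            self.executed += 1
            return True


guard = RuntimeGuard(max_exploits=2)
print([guard.may_execute() for _ in range(3)])  # [True, True, False]
```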

Example: Controlled Simulated Payload Deployment

Here’s a basic simulation that logs a test upload but performs no real action. The payload is inert and flagged as such.

```python
# Controlled simulated payload deployment: inert payload, dry run only
simulated_payload = {
    "target": "10.0.2.21",
    "type": "web_shell",
    "content": "",  # empty: the payload carries no executable code
    "mode": "simulate",
    "execution_flag": False,
    "callback_url": None,
}


def deploy_payload(payload):
    # Only inert, non-executing payloads in simulate mode are accepted
    if payload["mode"] == "simulate" and not payload["execution_flag"]:
        print(f"Uploading test payload to {payload['target']} (dry run only)")
        # Log action, but skip actual deployment
    else:
        raise ValueError("Unsafe payload configuration")


deploy_payload(simulated_payload)
```

This confirms that the payload will be logged for validation but never executed. It allows the AI system to trace and validate exploit paths without putting systems at risk.

AI-Powered Pen Testing in 5 Key Gains

- Continuously maps and validates exploit chains across hybrid environments.

- Uses safe-mode simulation to avoid production impact.

- Integrates with Jira/Splunk for real-time remediation.

- Automatically aligns findings to ISO/IEC 27001, IEC 62443, and NIST controls.

- Human oversight ensures responsible, targeted action.

Conclusion: Making the Shift to AI-Powered Penetration Testing

AI-powered penetration testing is not a replacement for skilled human red teams; it is an operational multiplier. By simulating attacks continuously, at scale, and with contextual intelligence, AI systems close the gaps that traditional penetration tests leave behind.

In modern environments where infrastructure changes by the hour, static testing cannot keep up. Asset sprawl, ephemeral services, misconfigurations, and privilege paths evolve rapidly. Without continuous validation, risk assessments become outdated before remediation even begins.

AI systems enable organizations to:

- Discover vulnerabilities in real time

- Chain issues into realistic attack paths that matter

- Validate what is exploitable, not just what is detectable

- Map findings to compliance controls automatically

- Trigger remediation workflows within existing ticketing and security tools

But the power of AI also demands discipline. Safe testing zones, well-defined policies, approval workflows, and compliance boundaries are essential. Responsible deployment is what transforms a technical capability into an enterprise-grade control.

Glossary

- ISO/IEC 27001 – International standard for managing information security

- IEC 62443 – Cybersecurity standard for industrial control systems (ICS)

- NIST – National Institute of Standards and Technology, publisher of the NIST Cybersecurity Framework (CSF)

- PCI-DSS – Security standard for protecting cardholder data

- CVE – Common Vulnerabilities and Exposures

- SCADA – Supervisory Control and Data Acquisition systems

- IAM – Identity and Access Management

- SIEM – Security Information and Event Management

- CI/CD – Continuous Integration / Continuous Delivery

- VM tools – Vulnerability Management tools